Our goal from the proposal is still the same: we want to make a simple and easy way to allow the user to farm. In order to run more trials in a shorter amount of time, we increased the probabilities of crop growth by changing the gamerule randomTickSpeed. We also decided to work on the project incrementally by breaking it down into phases.

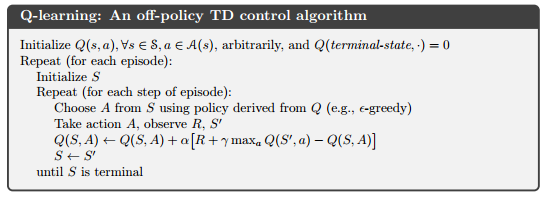

Our main algorithm:

States: For our starting farmland, we have a nxn block of land. Each block can be wheat or air (plant has already been harvested). There are 2^(nxn) possible states.

Actions: For each of the nxn possible cells, Steve can either wait (for the crops to grow) or harvest the crop at the specified cell. The next action to take is based on the epsilon-greedy policy.

Reward Function: Currently, the reward is based on the crop yield that the agent harvests and collects in its inventory. If the agent harvested the crop too early, then it will only get wheat seeds, leading to a negative reward. Waiting until the plant reaches full maturity will yield wheat, which results in a positive reward.

Phase 1: Harvesting

Goal:

Ensure that Steve can intelligently harvest the crops in a controlled environment (constant noon, light level constant, constant hydration levels, crops already planted in farmland)

Actions:

harvest(x,y,z) and wait.

Rewards:

- Harvest:

-1 * yield: wheat_seeds

+50 * yield: wheat - Wait:

-10: wait for the crop to grow

Estimated Max Reward: [64 wheat seeds (starting out) + 18 wheat seeds (harvested)] * -1 + 9 wheat (harvested) * 50 = 368

Phase 2: Planting

Goal:

Ensure that Steve can intelligently plant and harvest the crops in a controlled environment (constant noon, light level constant, constant hydration levels, hydrated farmland)

Actions:

plant(x,y,z), harvest(x,y,z) and wait.

Additional Rewards:

- Plant:

-10/crop: using precious food to make food

Estimated Max Reward: For a trial of 60 seconds (1 minute), there is an average case that a crop will grow to full maturity in about 8 seconds, so there’s 60/8 = 7.5 farming cycles with a possibility of 18 wheat seeds, 9 wheat, -9 * 10 (planting penalty) = 7.5 * (368 - 90) = 2085

Phase 3: Hoe-ing the land

Goal:

Ensure that Steve can intelligently hoe the land, and plant and harvest the crops in a controlled environment (constant noon, light level constant, constant hydration levels)

Actions:

hoe(x,y,z), plant(x,y,z), harvest(x,y,z) and wait.

Additional Rewards:

- Hoe:

-10: Once the land is hoe’d, it will remain hoe’d, but it is physically taxing

Estimated Max Reward: For a trial of 60 seconds (1 minute), assuming that the agent hoes the entire land at the beginning and that takes 0.5 farming cycles, there’s 7 farming cycles remaining with a possibility of 18 wheat seeds, 9 wheat, -9 * 10 (planting penalty) = -10 + 7 * (368 - 90) = 1936

Qualitatively, the project will solely be evaluated on how well Steve can harvest. It should know to wait for the crop to fully be able to be harvested. If Steve can achieve 90% of the expected max reward in that phase by the end of the iterations, it will be considered a success. If it cannot reach 90% of the expected max reward by the end of the iterations, we will continue to play around with the parameters in the Q-learning algorithm.

Current Lmitations:

Our prototype is very limited because we are doing the project in it’s most basic form. By doing it iteratively, we can ensure that we are doing the project correctly, and our current research and learning will help us a lot in the future. The first phase is the most difficult because there is a steep learning curve, but the rest of the phases should be a lot easier in theory.

Remaining goals:

- continue through phases

- read entire documentation about the possible functions

- improve methods for evaluation

Challenges

- balancing working on project along with other finals and weekly homework assignments.

- starting earlier on assignments and progress reports.

- current functions available do not give enough information for what we want to do. For example, we want Steve to learn how to harvest only when it is fully grown (carrot with age 7), but the observations do not allow for this functionality. It only shows that there are carrots in that block.

- unsure how to organize q-table. We need to organize it by the tiers for the best places to grow as well as the current state of the crop. Currently using the entire field as an entry.

- there are too many states to keep track of, so it makes training to the ideal state take a long time.

- lack of good documentation, examples, and online resources since the platform is relatively new.